Exactly one year ago, on September 9th, 2022, Extra Horizon N.V. was born as a spin-off out of the womb of Qompium [FibriCheck]. After spending […]

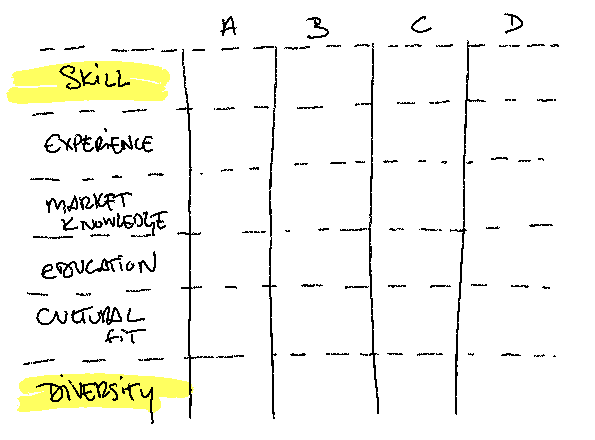

Diversity vs Merit in the hiring process

CEOs who say that Diversity should not override Merit in the hiring process, like Frank Slootman (Snowflake) in this Bloomberg interview, are spreading the wrong […]

STOP measuring EVERYTHING!

Have you also noticed how QUANTITATIVE our world has become? Common sense has been pushed to the back in favor of “objectification”. Don’t get me […]

Think like a product manager regardless of your job title.

This tweet from one of my besties in the tech space is the inspiration for this post. As a product manager, I have of course […]

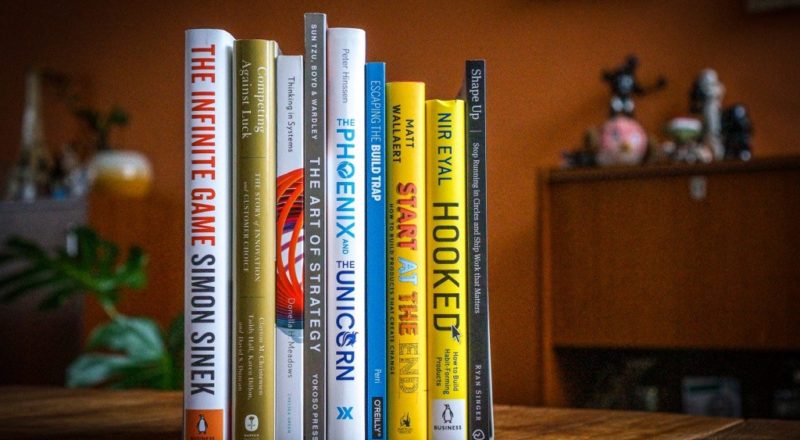

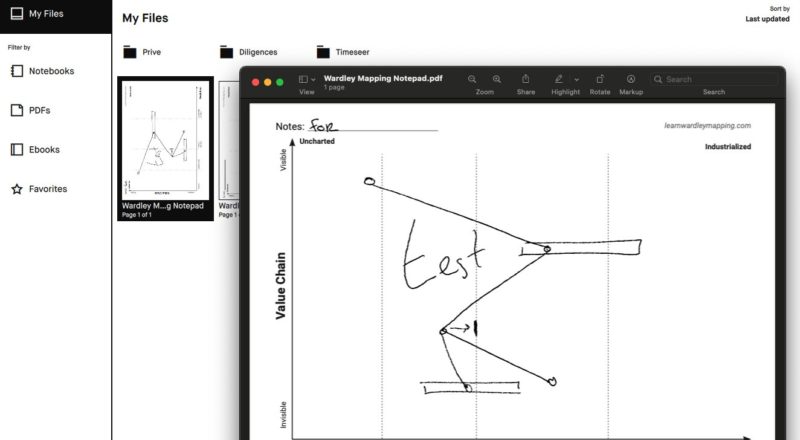

How I’m Using the reMarkable2

Step 1: organization. Maybe the biggest difference, and the reason why I bought the reMarkable, is the ability to scribble as you’d do on an A5 […]

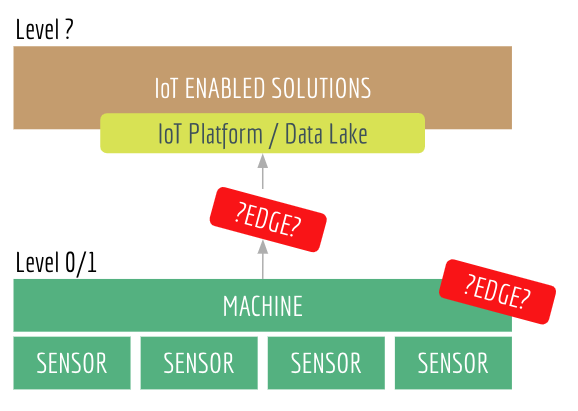

Where's the EDGE?

Last week I wrote/ranted about the current (missing) level of Device (Machine) Lifecycle Management in IoT Platforms. This week I am sharing another aspect of […]

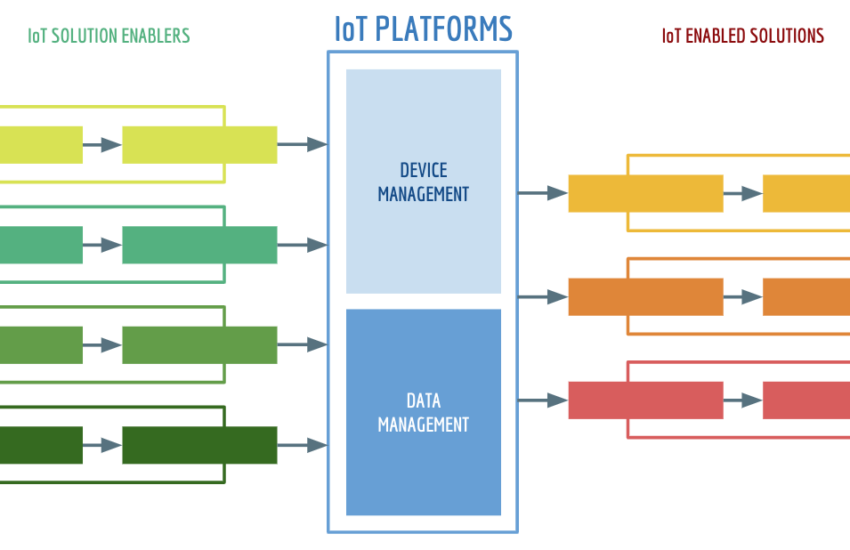

Closing the GAP in the IoT Platform Play

This post has been brewing in my head for over a year already, and it’s finally ready to come out. This post is also the […]

The rise of the multi-domain expert

The dominance hierarchy. The basic premise of any dominance hierarchy is that a minority of people is going to be fantastically successful and a very […]

Your unique selling points are unique because your competition allows that

Thought I’d kick it off with a little thought-provoking title. Not just as clickbait, because I truly believe in that statement, but more about […]

Customer Support procedures are the basics for Customer Success

There are two types of blog posts I write: sharing experiences I have been chewing on for a long time, or a sudden rant about an annoyance. […]

Making the case for permissioned blockchain technologies

Earlier this year I joined Gospel Technology in London as its VP Product Strategy, and it’s about time I started sharing some of the goodness […]

My colleague has AD(H)D – what now?

I recently got the following request from a close friend: “Do you have any advice for managing employees with ADHD? I’ve just had a chat […]
