This post makes more sense if you know the background, so please read this Reddit post, in which an early adopter ran into an issue with VSAN. In short: after a node failure in a 3-node configuration, the rebuild to a 4th node brought the whole cluster to its knees. Important to note: there was absolutely no data loss, just a whole environment that went down while rebuilding. For my take on the reasons and a few design tips, go to the end of the post 🙂

One of the comments that I and a lot of other people made was that a 4-node cluster would have helped this man because of the n+2 resiliency. After thinking about it, that actually doesn’t really make sense. The only thing an extra node gives you is more working nodes in the cluster when you need to rebuild.

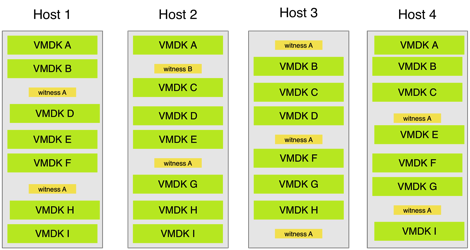

Rebuild Examples:

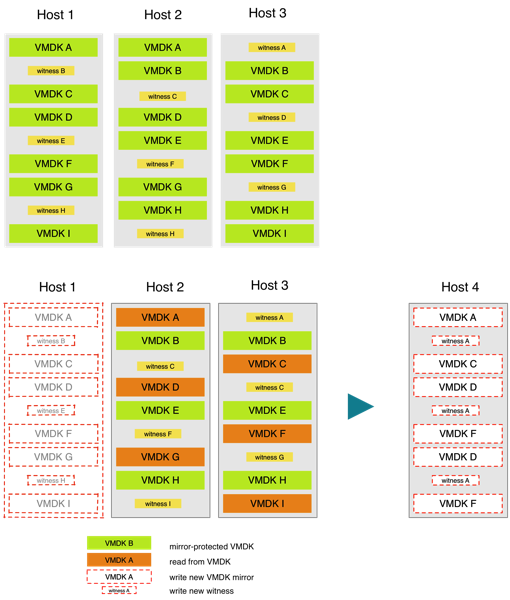

Allow me to explain by example: in this case we have a nicely spread 3-node cluster with 2way mirror protection.

What was the impact of a node failure? 6 full VMDKs were no longer mirror protected. So those 6 full VMDKs had to be read from the remaining hosts and written to a new host; 3 witness files were impacted as well. The witnesses can be ignored as far as performance goes, as they are only ~2MB files. The impact, however, is EXACTLY the same whether you redistribute the lost data to existing hosts or to a new/spare host. In the following case only 5 VMDKs are impacted, but redistributing to the other nodes in the cluster or to an extra node takes the same amount of reads/writes.

What is the impact of 1 node failure?

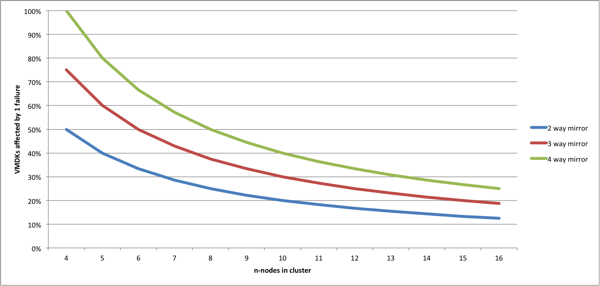

Protection level / hosts = storage impacted
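As a rough sketch of this rule of thumb: the snippet below assumes every VMDK has the same number of mirror copies, each copy on a different host, and that copies are spread evenly over all hosts. The function name is mine, purely for illustration, and has nothing to do with any VSAN API.

```python
from fractions import Fraction


def impacted_fraction(copies: int, hosts: int) -> Fraction:
    """Fraction of VMDKs that lose one copy when a single host fails.

    Assumption: each VMDK has `copies` mirror copies on distinct hosts,
    spread evenly across `hosts` hosts.
    """
    if copies < 1 or hosts < copies:
        raise ValueError("need at least as many hosts as copies")
    return Fraction(copies, hosts)


# The configurations discussed in this post.
for copies, hosts in [(2, 3), (2, 4), (3, 4)]:
    frac = impacted_fraction(copies, hosts)
    print(f"{copies}-way mirror on {hosts} hosts: "
          f"{float(frac):.0%} of VMDKs impacted")
```

Adding hosts lowers the fraction per failure, while adding mirror copies raises it, which is the trade-off the rest of this post is about.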

Example: 100 VMDKs x 2way protection / 3 hosts = 2/3, so roughly 67% of the VMDKs are impacted and need a new mirror to be fully protected again. When we put this in a graph, you get the following:

So why bother with n+2?

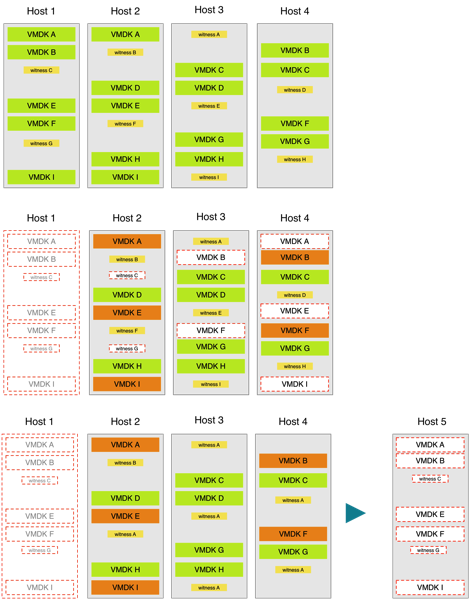

For maintenance! When you go into maintenance mode you can migrate your data to the other hosts in the cluster, provided there is enough available space and the chosen failure protection can still be satisfied. For this you’d need 4 hosts with 2way protection. This is what it would have looked like:

You will notice that this looks exactly the same as the failure did. It also has the exact same impact on reads/writes. So what is the difference? The difference is that while migrating for maintenance mode, your protection level is not impacted. Your data stays safely mirrored at all times, so you can still survive a hardware failure should one occur.

What if I don’t want this?

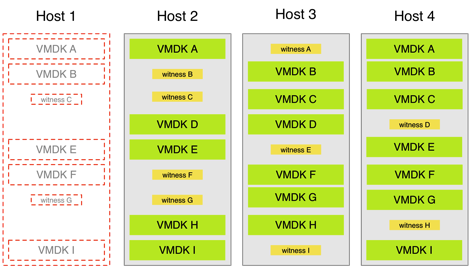

If you want hardly any impact on read/writes AND you want hardware failure protection at all times, a 3-way mirror is your only option! In this last example we have a 3-way mirror well distributed over 4 hosts. When any of these hosts goes down, we have a policy issue with 75% of our VMDKs, but ALL of them would still have a working 2way mirror.

PITFALLS!

- A lot depends on whether or not you get done what you need to within 1 hour! Even if you have a 3-way mirror, which gives you that hardware resiliency in case of an emergency, VSAN would still start rebuilding the 3rd copy after that 1-hour grace period.

- Hard to believe, but in this last case you can’t put your machine in maintenance mode, as that would still initiate the redistribution …

My $0.02

- Migrating 1/3rd of a distributed storage stack has a far greater impact on your infrastructure than using vMotion to move the memory state of some VMs! Make sure that your hardware design is up to the task! Just because Windows 8.1 can run on 1GHz/1GB doesn’t mean you should run it that way. In the same way, some parts of the VSAN HCL are probably not the best for a production environment. Please read this post by my friend Jeremiah Dooley, who shared his thoughts on this matter. It’s about the importance of hardware in a software-defined world!

- I truly believe that VSAN can be disruptive in the market. This doesn’t mean I think everything in VSAN is designed as well as it could be. Every design has its tradeoffs to make. One of the things I would have liked more is a true striped architecture rather than mirrored VMDKs.

- I think a lot more can be done in the throttling/QoS of the data migration in VSAN. We all underestimate the impact far too much. Maybe I want the option to go into maintenance mode without enforcing the data migration? Do we need a higher priority for data migration on failure than on maintenance mode?

- This “problem” of redistributing a whole node is not VSAN only! This is an issue for every distributed storage solution and especially when they are combined with virtualization. Every vendor will solve this differently but in essence this is a general storage architecture design issue every vendor has to tackle.

EDIT

After Jim Millard’s remark I have to admit that the number of nodes needed for failover does differ from what I have used. Apparently that has to do with the witnesses. There seems to be 1 witness file PER FAILOVER LEVEL and they have to be on different hosts. So a failover tolerance of 2 failures would result in 3 mirrored copies and 2 witness files, with a minimum configuration of 5 nodes! It doesn’t really change the idea behind this blog post. I found the info on Duncan’s blog but can’t find an explanation for that behaviour.
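To make the arithmetic in this edit concrete, here is a minimal sketch. It takes the description above at face value: one extra mirror copy and one witness per failure to tolerate, every component on its own host. The function name and the returned keys are hypothetical, and real VSAN may well place witnesses differently per object.

```python
def layout_for_failures(failures_to_tolerate: int) -> dict:
    """Component counts and minimum host count for a given failure tolerance.

    Assumption (from the text above): tolerating N failures needs N+1
    mirror copies plus N witnesses, each on a different host.
    """
    if failures_to_tolerate < 0:
        raise ValueError("failures_to_tolerate must be >= 0")
    mirrors = failures_to_tolerate + 1
    witnesses = failures_to_tolerate
    return {
        "mirrors": mirrors,
        "witnesses": witnesses,
        "min_hosts": mirrors + witnesses,  # same as 2 * N + 1
    }


for ftt in (1, 2):
    print(ftt, layout_for_failures(ftt))
```

Under these assumptions, tolerating 1 failure needs 3 hosts and tolerating 2 failures needs 5, before you add any spare host for maintenance.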


When considering the least-write-rebuild cost, FTT=2 doesn’t help you any more than FTT=1 after the 1H “wait for the host to reappear” time elapses. FTT=2 also changes the node count more than you think: You cannot enable that policy on a 4-node cluster; it must be 5 (policy requires FTTx2+1 nodes). At that point, you’re up to a 6-node cluster in order to have a “spare” for maintenance purposes; for the same set of VMs in your example, simply spreading the existing VMs with FTT=1 over more nodes further reduces write-burden for policy compliance. More reasonable, however, would be to actively manage the individual VM’s policy from FTT=2 to FTT=1, which would (theoretically) allow the VM to discard the missing components as part of policy re-compliance. That leaves the VMs in an N+1 state, but the admin can slowly migrate them back to N+2 (aka FTT=2) instead of VSAN doing it “all at once” in order to lower the write burden.

The biggest problem with this theory is that it’s untested (you’ve got to have 6 nodes to even contemplate the experiment), and there’s no guarantee that VSAN won’t create a brand-new object copy under the new policy instead of simply editing the metadata to discard the missing component when reducing from FTT=2 to FTT=1.

Yes, I have seen people playing with the idea to overrule FTT=2 to FTT=1 but this is for me one bridge too far. The VMs as such should never be manually (or even scripted) touched for infrastructure/cluster maintenance. As an admin you shouldn’t even know which machines are on what host. You either put a host in maintenance or you don’t. The impact of that action should be handled at that point and nowhere else.

This is a lot simpler situation than people are making it out to be. The old firmware on these controllers is bad. Really bad.

Without VSAN I struggled to break 300 IOPS before latency got bad. After I upgraded them and extended the queue depth to 600 magically I could push 20x the IOPS at 1/30th the latency. This is just a case of something getting put on the HCL for “it will work in low IO, and not cause data loss” but should have a giant disclaimer (or Dell should fix their firmware and release the upgraded LSI firmware).

http://thenicholson.com/lsi-2008-dell-h310-vsan-rebuild-performance-concerns/

That said sizing for rebuild impact is not a new concept. Back with RAID 50 EqualLogic arrays I had to explain to customers that they needed to keep in mind that performance for random writes was cut in 1/4 and performance could get a further 25-50% worse during a rebuild so they had to factor that in for their IOPS calculations (and often why going RAID 10 for faster lower impact rebuilds became a better idea).

Thx for stepping in John. You are right. In the end we can tie everything back to an entry-point HBA with the wrong firmware. I am planning an update post today with some more facts on VSAN, as a few points in my post could lead to wrong conclusions.

Next up in the lab now that I’ve mastered the mystery of the bad HBA. SWITCH TESTING!

I have a Netgear (M7100 and a really cheap one) vs. a Juniper EX2200 vs. Brocade ICX 6610 vs. Cisco 3750X vs. a questionable unmanaged GB DLINK.

I know there isn’t a switch HCL, but before customers start trying to use the cheapest checkbox, I want to know how bad/good things get between different switch options.

You bring up a very good point. I have had my share of issues with undersized switches, mostly in iSCSI environments, and I can only assume a distributed layer like VSAN is going to be worse in that respect. As a general rule, I think internal bandwidth and port memory buffer are the two key focal points. Maybe a good tip for Duncan to start a new poll 🙂
