[WARNING] Long read but probably worth it 🙂

It’s been a while since we heard anything from Caringo, let alone good news … Apparently they know! Which is a good sign to start with. Two weeks ago I got a call that they were launching the 7th edition of CAStor, but that it would be seriously rebranded. From now on, CAStor will be SWARM. To reassure you that it’s not something brand new, they did keep the version number.

What you may not know is that CARINGO is named after its Belgian founder Paul Carpentier (CTO) and his two partners Jonathan Ring and Mark Goros, with whom he previously had a success in the early 1990s developing the first Client Server Middleware, a term they even trademarked back then. Since Paul is Belgian and we had never met before, I thought it was about time we did a 1-on-1. And boy, did I have a good time. I basically got my private 4-hour TechFieldDay session with the CTO of a global storage company, right in my back yard (called Belgium).

In Previous Episodes

Paul Carpentier is also the brains behind FilePool, which he sold to EMC in 2001 and which later became Centera. It was an instant success, as the dotcom bubble had just burst and Centera was the first platform that got recognised by the SEC for archiving data because of its unique hashing algorithms. In fact, if you booted one up today you’d still see FilePool code coming out. After that Paul had a lot of thoughts on how he would build FilePool and Centera if he could do it all over again. That’s when he came up with the idea of CAStor.

Parity protection

A few things were just not flexible enough in the Centera solution. For example, he wanted to get away from a single protection scheme per cluster and thought it would be better to set that per object. In CAStor you can set the replication scheme to the standard 2 copies, but just as easily to 3 or 4 copies, or to multiple protection schemes for different periods of time.

Erasure, R U Sure?

Until last week I still had CAStor in the back of my mind as the old-school object storage that only did replication-based protection, which in the end has a lower storage efficiency (== bigger footprint). Erasure coding has, for example, been the strength of Cleversafe, who designed their own proprietary algorithms to do erasure coding on large object storage. In version 6 Paul added erasure coding as one of the possible protection schemes for an object. So again: not cluster-wide and not as the only option. Here are a few reasons why:

- Erasure coding is not really a smart move for smaller clusters. To be protected against node failures you need at least as many nodes as there are segments in your protection scheme. Example: 16 out of 20 requires 20 nodes minimum.

- Erasure coding is not really suited for a large set of small items. Erasure coding requires some CPU cycles on every object, so it’s better to use an n-copy protection scheme here.

- If you combine the two over time, you could use an n-copy scheme for the first few months and move to an erasure parity scheme later for durability and footprint reduction.
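To put some numbers on that trade-off, here is a minimal sketch of the generic arithmetic behind n-copy versus k-out-of-n erasure coding. It is not Caringo’s code or their calculation model: the class and method names are my own, and it assumes one segment (or copy) per node.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replication:
    """n-copy protection: every copy is a full copy of the object."""
    copies: int

    def raw_per_usable(self) -> float:
        # Raw capacity consumed for every unit of usable data.
        return float(self.copies)

    def tolerated_losses(self) -> int:
        # All copies but one may be lost.
        return self.copies - 1

    def min_nodes(self) -> int:
        # Assumption: one copy per node.
        return self.copies


@dataclass(frozen=True)
class Erasure:
    """k-out-of-n erasure coding: any `data` of the `total` segments rebuild the object."""
    data: int   # k, segments needed to read the object
    total: int  # n, segments written (data + parity)

    def raw_per_usable(self) -> float:
        return self.total / self.data

    def tolerated_losses(self) -> int:
        return self.total - self.data

    def min_nodes(self) -> int:
        # Assumption: one segment per node, as in the "16 out of 20" example.
        return self.total


if __name__ == "__main__":
    for scheme in (Replication(2), Replication(3), Erasure(16, 20)):
        print(scheme,
              f"raw/usable={scheme.raw_per_usable():.2f}",
              f"tolerates={scheme.tolerated_losses()}",
              f"min_nodes={scheme.min_nodes()}")
```

With these assumptions, 16 out of 20 stores 1.25 bytes of raw capacity per usable byte and survives 4 lost segments, while 2 copies costs 2 bytes per usable byte and survives only 1 lost copy. The catch is the 20-node minimum, which is exactly the small-cluster point from the list above.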

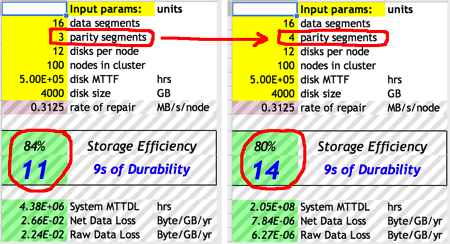

Other than that, I think this calculation model Paul gave me says enough about how much storage efficiency you gain for a given durability with erasure-coded protection. Note: some companies use chunks, others use shards, Caringo uses segments.

It’s getting dark in here

How about really, really old data that I don’t need anymore? There have been a lot of attempts at solving this piece of the expensive storage puzzle. The Caringo way of solving this is called Darkive. They basically spin down parts of the cluster, whether entire nodes or just disks, and dynamically adjust which parts are spun down. This way they can keep the data and nodes alive for a very long time and conserve a lot of power, while the data is still available when necessary.

What’s new in SWARM7?

Optimised RAM index

Previously every node had the index of its own content in RAM. When a request came in at a node that did not know where the requested object was, it did a multicast to all other nodes, and the ones that had it replied with their payload for it. The client request would then be redirected to the node with the lowest payload. The only problem is that above a certain number of requests per second, this constant tapping on the shoulder (multicast interrupt) for data that is not yours anyway has an impact on your performance.

In SWARM7 they have added a golden pages index on top of that in every node. If a request now comes in that is not for that specific node, it will immediately know which node to ask. So instead of a multicast you’ll get a unicast. This will have a great impact on the scalability of the cluster and the performance of any existing infrastructure.
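To make the difference tangible, here is a toy sketch that counts the messages a lookup costs in both models. It is purely illustrative and not how SWARM is implemented: the `Node` and `Cluster` classes are made up, and it assumes every node’s directory is updated the moment an object is stored, which glosses over how the real index is built and kept in sync.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.local = set()    # ids of objects stored on this node
        self.directory = {}   # object id -> owning node (the "golden pages")


class Cluster:
    def __init__(self, names):
        self.nodes = {name: Node(name) for name in names}

    def store(self, obj_id, owner):
        self.nodes[owner].local.add(obj_id)
        # Assumption: directories are updated everywhere, instantly.
        for node in self.nodes.values():
            node.directory[obj_id] = owner

    def lookup_multicast(self, entry, obj_id):
        """Old model: ask every other node. Returns (owner, messages sent)."""
        if obj_id in self.nodes[entry].local:
            return entry, 0
        owner, messages = None, 0
        for name, node in self.nodes.items():
            if name == entry:
                continue
            messages += 1  # every other node gets interrupted
            if obj_id in node.local:
                owner = name
        return owner, messages

    def lookup_directory(self, entry, obj_id):
        """New model: consult the local directory, then unicast to the owner."""
        if obj_id in self.nodes[entry].local:
            return entry, 0
        owner = self.nodes[entry].directory.get(obj_id)
        return owner, (1 if owner is not None else 0)


if __name__ == "__main__":
    cluster = Cluster([f"node-{i}" for i in range(8)])
    cluster.store("obj-1", "node-3")
    print(cluster.lookup_multicast("node-0", "obj-1"))  # ('node-3', 7)
    print(cluster.lookup_directory("node-0", "obj-1"))  # ('node-3', 1)
```

In this toy model the multicast cost grows with the number of nodes, while the directory lookup stays at a single message, which is why I expect the change to matter most on large, busy clusters.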

Massively Parallel Clients to Cluster

Have you ever heard of massively parallel computation clusters that run in RAM? Think about weather simulation models. These calculations may take days and there is no way of protecting the data because it never lands on disk. There are systems that take a point-in-time snapshot of the state of those machines, and Caringo has now designed a method to make them all write in parallel to their cluster. Don’t ask me about details because I probably wouldn’t understand how it works 🙂 What I can see is that this can only help optimize bandwidth, hence throughput, hence smaller protection windows.

Read-only Hadoop filesystem

Apparently one of the problems of Hadoop is that you first need to extract the dataset you need before you can do massive calculations on it and end up with a small dataset of answers. Caringo wrote their own Hadoop Connector, which is basically their own answer to HDFS and provides the same map/reduce API results. And yet again I can only see the advantages in massive reads at high speed, shortening another window.

Infrastructure optimizations

Quite a few other backend optimizations have been added to SWARM7. One that I heard from Paul was changing some Python-based code to C to speed up booting and other local processes.

What’s next?

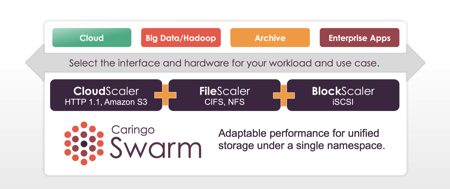

One of the biggest advantages of object-based architectures is that they scale massively. One of the downsides is integration with existing platforms. In the end object storage is a language spoken from within an application, so the application has to be adjusted to that specific language. From a usage perspective it’s not comparable to file- or block-based storage, which is terminated in the operating system and is transparent to applications. Caringo already had a fileserver optimised for their infrastructure. The problem they solved there was that with large-scale filesystems, latency increases exponentially once you pass millions of objects. The Caringo file-header (SMB/NFS) was specifically designed to have a consistent speed, not necessarily the fastest, no matter what the size of the cluster is.

Later this year in Q3 they are adding a technical preview of a block-based iSCSI option as well. It’s definitely going to be a Gen1 option for the people that are really interested. So if you are … I’d say call Paul because they can probably use some corner cases here.

My $0.02

I am definitely happy I took the time to go listen to Paul. At the very least they are back on my radar, and in a good way. I did revise my previous opinion, which was based on 2-year-old knowledge from the time I got pitched the DX6000 series (an OEM of CAStor) by DELL. Caringo is definitely a partner worth considering if you are looking at other solutions from Cleversafe, Scality, DataDirect Networks, …