At the E2EVC convention (by the way, the best geek convention for virtualization people), Aidan Finn (@joe_elway) gave a great presentation on how to design and implement a Windows Scale Out File Server (SOFS) with Windows Storage Spaces. I have already acknowledged in the past that I love what Microsoft is doing in this space, and I would definitely keep it in mind if I were designing a storage system in the field!

I’ve said it before – Microsoft Storage Spaces & Scale Out File Server looks awesome @joe_elway #E2EVC pic.twitter.com/VxmgkkUCTp

— Hans De Leenheer (@HansDeLeenheer) May 31, 2014

However! There is one thing I have to get off my chest: Windows Scale Out File Server is NOT scale out! Luca Dell’Oca and I both had the same comment during Aidan’s presentation: this looks a lot like the HP 3PAR full-mesh back-end. Let me explain with some graphics why I conclude that Windows is using the term scale-out wrong.

On disks and controllers

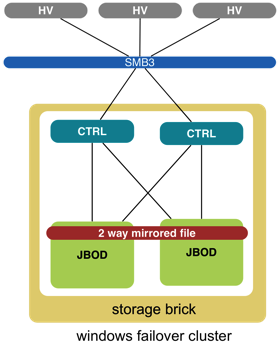

The Windows Scale Out File Server is based on the idea of sharing physical disks between at least 2 storage controllers through SAS or InfiniBand. This is the classic way storage arrays are designed in the likes of HP EVA, EMC Clariion, and even some newer architectures like NimbleStorage or PureStorage. Basically you have 2 active/active storage controllers connected to all disks in the back-end. In practice the data will be 2-way mirrored, 3-way mirrored, or parity protected. But remember: we are not protecting disks here but data! So please forget about RAID.

How to scale

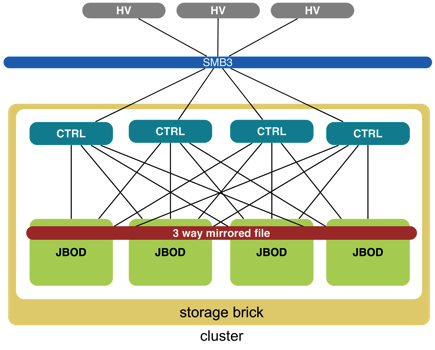

There are two ways of scaling Windows Scale Out File Server: you can connect more JBODs and you can connect more controllers. This is the example Aidan used, where we basically have 4 front-end storage controllers (Windows servers) connected to 4 JBODs with a total of 240 3.5” SAS disks. We can have the same protection types here, and in fact when you select 3-way mirroring it will put the 3 copies on different nodes, so you would even have node-failover reliability. It also gives you the possibility to use Cluster Aware Updating.
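To put rough numbers on Aidan's example, here is a minimal back-of-the-envelope sketch. The 4 JBODs and 240 disks come from the example above; the even split across JBODs, the 4 TB disk size, and the function names are my own assumptions for illustration. It ignores parity layouts, hot-spare reserve, and metadata overhead, so treat the results as upper bounds rather than sizing guidance.

```python
from fractions import Fraction

# Figures from the example above.
JBODS = 4
TOTAL_DISKS = 240

# Hypothetical disk size, not stated in the presentation.
DISK_TB = 4

# Mirroring keeps N full copies, so usable space is 1/N of raw.
USABLE_FRACTION = {
    "2-way mirror": Fraction(1, 2),
    "3-way mirror": Fraction(1, 3),
}


def disks_per_jbod(total_disks: int, jbods: int) -> int:
    """Assume the disks are spread evenly over the JBODs."""
    if total_disks % jbods:
        raise ValueError("disks do not divide evenly over the JBODs")
    return total_disks // jbods


def usable_tb(total_disks: int, disk_tb: int, protection: str) -> float:
    """Upper bound on usable capacity for a mirrored layout."""
    raw_tb = total_disks * disk_tb
    return float(raw_tb * USABLE_FRACTION[protection])


if __name__ == "__main__":
    print("disks per JBOD:", disks_per_jbod(TOTAL_DISKS, JBODS))
    for protection in USABLE_FRACTION:
        print(protection, usable_tb(TOTAL_DISKS, DISK_TB, protection), "TB")
```

With these assumed numbers, 960 TB raw becomes at most 480 TB with 2-way mirroring and 320 TB with 3-way mirroring.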

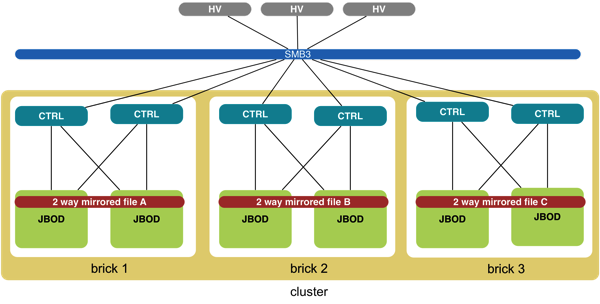

The next step here is that you can put multiple storage bricks in one cluster. Basically a single cluster can have a maximum of 8 storage controllers in any combination. You can have 8 controllers in 1 storage brick, you can have 2 bricks of 3 plus one of 2, or you can have 2×4 / 4×2. Be aware, however, that a single file (CSV for example) will reside on only one brick! If you want the data to reside on more than one brick, you’ll have to use replication.
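The brick combinations above boil down to a simple rule, sketched here in Python. The limit of 8 controllers per cluster is from the presentation; the minimum of 2 controllers per brick is my reading of the shared-disk design described earlier, and the function name is made up for this post.

```python
MAX_CONTROLLERS_PER_CLUSTER = 8   # limit quoted in the presentation
MIN_CONTROLLERS_PER_BRICK = 2     # assumption: a brick shares its disks
                                  # between at least 2 controllers


def is_valid_cluster(bricks: list[int]) -> bool:
    """Check a cluster layout given as controllers-per-brick.

    Example: [3, 3, 2] means two bricks of 3 controllers plus one of 2.
    """
    if not bricks:
        return False
    if any(size < MIN_CONTROLLERS_PER_BRICK for size in bricks):
        return False
    return sum(bricks) <= MAX_CONTROLLERS_PER_CLUSTER


if __name__ == "__main__":
    for layout in ([8], [3, 3, 2], [4, 4], [2, 2, 2, 2], [4, 4, 2]):
        print(layout, is_valid_cluster(layout))
```

The last layout, `[4, 4, 2]`, is rejected because it adds up to 10 controllers.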

The only way this would make sense IMO is if you physically separate bricks that need to be served by the same cluster.

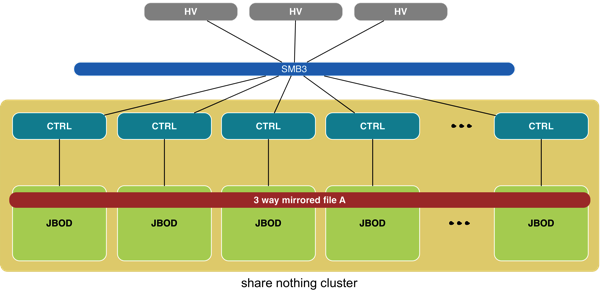

Why is this not Scale Out then?

Scale out is based on shared nothing, where every contributor owns its own hardware! Every member of a true Scale Out system contributes to the data protection. In a true scale out system there is no file owner. So although Windows Scale Out File Server scales BIG and I would definitely see a lot of use for it in the field … it does not have the right to coin this name for it.
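The difference can be shown with a toy placement model. This is purely illustrative: it is not how SOFS or any real scale-out product places data, and the hashing scheme, function names, and node/brick names are all invented for this sketch. The point is only that in the brick model a file has exactly one home, while in a shared-nothing model its copies are spread over several independent nodes.

```python
import hashlib


def _stable_index(name: str, modulo: int) -> int:
    """Map a name to a repeatable index in range(modulo)."""
    digest = hashlib.sha256(name.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % modulo


def place_on_brick(file_name: str, bricks: list[str]) -> str:
    """Brick model: the whole file lives on one brick."""
    return bricks[_stable_index(file_name, len(bricks))]


def place_shared_nothing(file_name: str, nodes: list[str],
                         copies: int = 3) -> list[str]:
    """Shared-nothing model: each copy lands on a different node."""
    if copies > len(nodes):
        raise ValueError("not enough nodes for the requested copies")
    start = _stable_index(file_name, len(nodes))
    return [nodes[(start + i) % len(nodes)] for i in range(copies)]


if __name__ == "__main__":
    bricks = ["brick-a", "brick-b"]
    nodes = ["node-1", "node-2", "node-3", "node-4", "node-5"]
    print(place_on_brick("vm01.vhdx", bricks))
    print(place_shared_nothing("vm01.vhdx", nodes))
```

Losing a whole brick in the first model takes the file with it unless you replicate; in the second model every node carries part of the protection.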

Take a look at the SNIA site at what is considered scale out, the variances and the degree of coupling.

http://www.snia.org/sites/default/education/tutorials/2010/spring/file/NicholasKirsch_Scale_Out_Storage.pdf

Thank you Eric for pitching SNIA’s view on the matter. Does that mean you agree or disagree with my findings?

“Scale out is based on shared nothing where every contributor owns its own hardware! Every member of a true Scale Out system contributes to the data protection.”

I agree with you, Hans. That’s exactly where Virtual SAN solutions come into play and turn Scale-Out File Server into what it should be. StarWind is one example; this screencast demonstrates it:

http://www.netometer.com/blog/?p=14

Hi Hans, nice write up. Look at the graphic for the bricks and then at the/your last graphic. Any similarities?

So SOFS scales out on the brick level.

It may not be at the controller level, and it may have some design/implementation gotchas, but “it is scale-out” and it is a “file server” 😉

Best regards,

George

It’s as much a Scale-Out FileServer as a Prius is an electric car 😉