When I don’t have everything at hand, I need the support of people who do to fact-check my posts. Therefore, thank you Duncan for the feedback. But actually, I should have been smarter in the first place: not having a homelab anymore doesn’t mean I can’t play with VSAN. So I took the VSAN Hands-On Lab session again and looked specifically at a few things that were either wrong in my previous post or needed elaboration.

Basic Facts

- VSAN maximum cluster size: 32 hosts, which is the same as the maximum vSphere Cluster

- 1 SSD and 1-7 HDDs per disk group

- SSDs do not count in capacity calculation as they are read/write caching devices

- as a rule of thumb, size the flash cache at 10% of the used capacity* of the host

- minimum version: vSphere ESXi 5.5 Update 1

- works with both the vCenter Server Appliance and vCenter Server on Windows

- managed through the vSphere Web Client, ESXCLI, RVC (Ruby vSphere Console) or the API

- not every member of the cluster needs to contribute to the storage cluster, although it is recommended

- disk claiming in a VSAN cluster is either manual or automatic. In automatic mode, every eligible disk is claimed as soon as you add it to a contributing host.

* used capacity: the capacity actually used inside all VMs. If you have 100 VMs, each with a 50GB VMDK of which only 25GB is used, you should allocate 250GB of flash (10% of 100 x 25GB).
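The footnote’s arithmetic as a minimal Python sketch, assuming the 10% rule of thumb above; the function name and parameters are my own, not part of any VMware tool.

```python
def recommended_flash_gb(vm_count: int, used_gb_per_vm: float, cache_percent: int = 10) -> float:
    """Flash to allocate: a percentage of the capacity actually used inside the VMs.

    The provisioned VMDK size is deliberately not an input: only used capacity counts.
    """
    used_capacity_gb = vm_count * used_gb_per_vm
    return used_capacity_gb * cache_percent / 100


# 100 VMs with a 50GB VMDK each, of which only 25GB is used
print(recommended_flash_gb(vm_count=100, used_gb_per_vm=25))  # 250.0
```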

Host failure protection

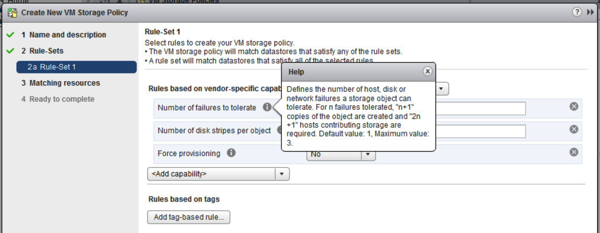

In the N+1 / N+2 examples I used, I did not take the witnesses into account, nor their effect on the cluster configuration. Here’s how the number of hosts needed is calculated:

Put simply, the number of hosts needed is (2 x FTT) + 1. For FTT=2 you need (2 x 2) + 1, so a minimum of 5 hosts; for FTT=3 that’ll be (2 x 3) + 1 = 7.
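The rule can be sketched as two small Python helpers: one for the (2 x FTT) + 1 host count and one for the majority check that keeps an object accessible. This assumes the simple unstriped layout of FTT + 1 data copies plus FTT witnesses, one component per host and one vote per component; the function names are my own.

```python
def min_hosts(ftt: int) -> int:
    """Minimum number of contributing hosts for a given FTT: (2 x FTT) + 1."""
    if ftt < 0:
        raise ValueError("FTT cannot be negative")
    return 2 * ftt + 1


def has_quorum(total_votes: int, failed_votes: int) -> bool:
    """An object stays accessible only while a strict majority of its votes survives."""
    return (total_votes - failed_votes) * 2 > total_votes


for ftt in range(1, 4):
    copies, witnesses = ftt + 1, ftt  # simple unstriped layout, one component per host
    hosts = min_hosts(ftt)
    print(f"FTT={ftt}: {copies} copies + {witnesses} witness(es) = {hosts} hosts, "
          f"still accessible after {ftt} host failure(s): {has_quorum(hosts, ftt)}")
```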

- Pro: adding a witness every time you raise the protection level is apparently the easiest way to keep the number of votes odd. It’s also easy to calculate, and there is no confusion about a witness going missing when it isn’t needed.

- Con: IMO with FTT=2 you would already have 3 votes, which would make a witness irrelevant and a 3-node cluster perfectly possible.

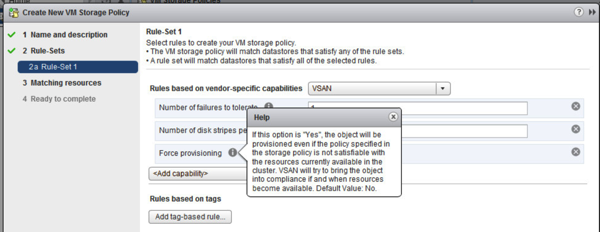

Enforce Provisioning

This means that you can force provisioning even when your current infrastructure configuration cannot satisfy the requested storage policy.

- Pro: (temporary) unavailability of resources doesn’t stop you from deploying

- Con: it could give you a false sense of SLA/security
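A toy model of what the switch changes, assuming the only constraint is the (2 x FTT) + 1 host count; real VSAN placement also weighs disks, capacity and the other policy settings. `Policy` and `provision` are hypothetical names, not a VMware API.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    ftt: int
    force_provisioning: bool = False


def provision(policy: Policy, available_hosts: int) -> str:
    """Toy model: decide whether a VM can be deployed under `policy`."""
    required_hosts = 2 * policy.ftt + 1
    if available_hosts >= required_hosts:
        return "provisioned, compliant"
    if policy.force_provisioning:
        # deployed anyway, but the object does NOT have the protection the policy asks for
        return "provisioned, NOT compliant"
    return "refused"


print(provision(Policy(ftt=1), available_hosts=2))                           # refused
print(provision(Policy(ftt=1, force_provisioning=True), available_hosts=2))  # provisioned, NOT compliant
```

The second call shows both the Pro and the Con: the VM gets deployed, but it runs without the protection the policy promises.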

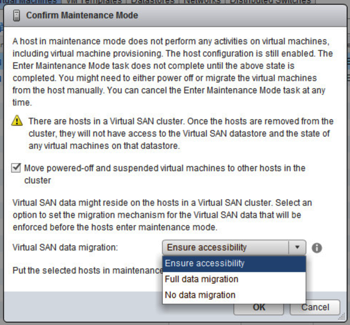

Maintenance Mode

In my previous post I wrote: “you can’t put your machine in maintenance mode as that would still initiate that redistribution …”

This was wrong! When you put a VSAN host in maintenance mode, you get the option to migrate its data off or not. On top of that, you can choose whether to migrate ALL the data off or just enough to “ensure accessibility”. An object with FTT=0 would then be moved to ensure accessibility; an object with FTT=1 or more would not, as it is still available through its other copies.

- Pro: all of the above

- Con: none. This is exactly how I wanted it to be
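The three choices boil down to which objects have to leave the host. A minimal sketch, assuming each object is described only by its FTT and the hosts holding its copies; the mode strings loosely mirror the options in the maintenance dialog, and the class, function and host names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VsanObject:
    name: str
    ftt: int
    hosts: frozenset  # hosts holding a copy of this object


def objects_to_migrate(objects, host: str, mode: str) -> list:
    """Names of the objects that must leave `host` before it enters maintenance mode."""
    on_host = [o for o in objects if host in o.hosts]
    if mode == "full":                    # migrate ALL the data off
        return [o.name for o in on_host]
    if mode == "ensure_accessibility":    # only objects with no copy elsewhere (FTT=0)
        return [o.name for o in on_host if o.ftt == 0]
    if mode == "none":                    # no data migration at all
        return []
    raise ValueError(f"unknown mode: {mode}")


objects = [
    VsanObject("vm1.vmdk", ftt=0, hosts=frozenset({"esx1"})),
    VsanObject("vm2.vmdk", ftt=1, hosts=frozenset({"esx1", "esx2"})),
    VsanObject("vm3.vmdk", ftt=1, hosts=frozenset({"esx2", "esx3"})),
]
print(objects_to_migrate(objects, "esx1", "ensure_accessibility"))  # ['vm1.vmdk']
print(objects_to_migrate(objects, "esx1", "full"))                  # ['vm1.vmdk', 'vm2.vmdk']
```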

Striping

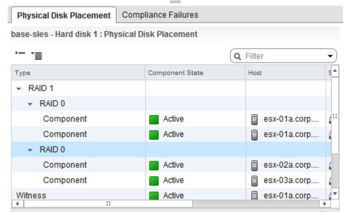

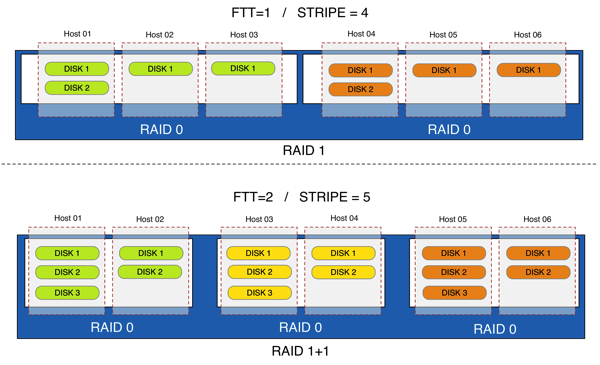

By default, an object (for example a VMDK) is written in a RAID 1 protection model over 1 disk per host. I emphasize “by default” because there is apparently an option to stripe across a maximum of 12 disks: that is 12 stripes, mirrored to 12 other disks. Furthermore, any object larger than 255GB will be striped as well, so that no single piece of it is larger than a disk. See what this did to my lab environment when I “upped” the striping from the default to 2.
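A back-of-the-envelope sketch of how stripe width, FTT and the 255GB limit multiply, using a hypothetical 40GB VMDK. The splitting rule here is my own simplification of VMware’s placement logic, which may divide large objects differently.

```python
import math

MAX_STRIPE_WIDTH = 12   # maximum stripe width mentioned above
MAX_PIECE_GB = 255      # larger objects get split further


def data_components(size_gb: float, stripe_width: int = 1, ftt: int = 1) -> int:
    """Rough count of data components (witnesses excluded) for one object.

    Assumes every replica is a RAID 0 of `stripe_width` stripes and that any
    stripe larger than 255GB is chopped into additional 255GB pieces.
    """
    if not 1 <= stripe_width <= MAX_STRIPE_WIDTH:
        raise ValueError("stripe width must be between 1 and 12")
    pieces_per_stripe = math.ceil(size_gb / stripe_width / MAX_PIECE_GB)
    return (ftt + 1) * stripe_width * pieces_per_stripe


print(data_components(40))                    # 2: default RAID 1, one disk per copy
print(data_components(40, stripe_width=2))    # 4: stripe width 2, as in my lab
print(data_components(40, stripe_width=12))   # 24: 12 stripes mirrored to 12 other disks
print(data_components(600))                   # 6: a 600GB VMDK needs 3 pieces per copy
```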

This needs a little elaboration. VSAN starts by using 1 disk per host; only when all hosts are participating and there are still not enough disks for all stripes does it start using a second disk on a host. That’s why you see 2 disks from Host 1 used for the first RAID 0 and one disk from each other host for the second RAID 0. Of course, smart algorithms in the background decide on the initial placement of each stripe to keep the cluster balanced. In the following example we have a 6-node cluster with 2 different striping and fault tolerance configurations.
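A simplified placement model, assuming 2 disks per host and ignoring witnesses, free capacity and the balancing heuristics VSAN really uses. With 3 hosts, FTT=1 and stripe width 2 it reproduces the layout described above: both stripes of the first RAID 0 on Host 1, one disk on each other host for the second. The host names and `place_stripes` are placeholders of my own.

```python
def place_stripes(disks_per_host: dict, stripe_width: int, ftt: int = 1) -> list:
    """Return, per replica, the (host, disk index) pair used by each stripe.

    Simplified rules: replicas never share a host, and a replica spreads its
    stripes over one disk per host before it uses a second disk on any host.
    """
    hosts = sorted(disks_per_host)
    replicas = ftt + 1
    if len(hosts) < replicas:
        raise ValueError("not enough hosts to keep the replicas apart")
    # Split the hosts into one disjoint group per replica, as evenly as possible.
    base, extra = divmod(len(hosts), replicas)
    layout, start = [], 0
    for r in range(replicas):
        size = base + (1 if r >= replicas - extra else 0)  # later groups get the spare hosts
        group = hosts[start:start + size]
        start += size
        stripes = []
        for s in range(stripe_width):
            host = group[s % len(group)]   # first one disk on every host in the group...
            disk = s // len(group)         # ...then a second disk, and so on
            if disk >= disks_per_host[host]:
                raise ValueError(f"{host} has too few disks for this stripe width")
            stripes.append((host, disk))
        layout.append(stripes)
    return layout


# 3-node lab, FTT=1, stripe width 2
for replica in place_stripes({"host1": 2, "host2": 2, "host3": 2}, stripe_width=2):
    print(replica)
# [('host1', 0), ('host1', 1)]
# [('host2', 0), ('host3', 0)]
```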

- Pro: striping! When the requested data does not reside in cache, you can benefit from parallel reads from multiple disks and hosts, spreading the load over the available resources. It also helps when rebuilding an entire host, whether that rebuild is forced by a failure or chosen for maintenance.

- Con: I have noticed VMware chose to use RAID 1 over RAID 0, in contrast with what most storage vendors do, which is RAID 0 over RAID 1. The difference is that in RAID 1 over RAID 0, a single block/disk failure affects half of the disks. In RAID 0 over RAID 1, only the one (or 2) mirrored disks would be affected.
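The Con in numbers: a minimal sketch counting the size of the unit that a single disk failure degrades, for 24 disks (12 stripes mirrored to 12 other disks). It models the two textbook layouts only; how VSAN then rebuilds the affected components is not modelled, and the function name is my own.

```python
def disks_affected(total_disks: int, layout: str) -> int:
    """Size of the unit degraded by one disk failure: a stripe set or a mirror pair."""
    if total_disks < 4 or total_disks % 2:
        raise ValueError("expects an even number of disks, at least 4")
    if layout == "raid1_over_raid0":    # a mirror of two stripe sets
        return total_disks // 2         # the failed disk takes its whole stripe set with it
    if layout == "raid0_over_raid1":    # a stripe over mirrored pairs
        return 2                        # only the failed disk and its mirror partner
    raise ValueError(f"unknown layout: {layout}")


print(disks_affected(24, "raid1_over_raid0"))  # 12
print(disks_affected(24, "raid0_over_raid1"))  # 2
```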

When I started using striping, I saw a witness being created on every host, although I still only had a 3-node cluster with FTT=1. If you want to know what happened here, you can read this post by Rawlinson Rivera (@PunchingClouds).

QoS – Throttling

Yes, a decent level of QoS/throttling is already built into VSAN. The issue with the Reddit article was that QoS/throttling makes no sense and has no use on an HBA with a queue depth of merely 25. That makes complete sense!
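Why a queue depth of 25 leaves so little room for throttling can be sketched with Little’s law. The 2 ms latency and the 20-slot rebuild share are assumed numbers for illustration, not measurements from the Reddit case.

```python
def max_iops(queue_depth: int, avg_latency_ms: float) -> float:
    """Little's law: outstanding I/Os = IOPS x latency, so IOPS <= queue depth / latency."""
    return queue_depth * 1000 / avg_latency_ms


LATENCY_MS = 2  # assumed average device latency, for illustration only

print(max_iops(25, LATENCY_MS))       # 12500.0: the ceiling for the whole host
# If a rebuild keeps 20 of the 25 slots busy, the VMs share what is left:
print(max_iops(25 - 20, LATENCY_MS))  # 2500.0
```

Throttling can only redistribute those slots between rebuild and VM traffic; it cannot add any.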

My $0.02

I hope this clears up a few misunderstandings that my previous post could have caused. It shows that the product is indeed a decent Gen1 product and that a lot of the basic features are present, even if I missed them at first glance. This is not just another driver. If you know how to set up a decent vSphere cluster, you are absolutely smart enough to set up a VSAN cluster. Choose the right hardware, make the right storage architecture decisions and you’ll be just fine.

That being said … never forget that you are dealing with a distributed storage layer here! The impact of your cluster management actions is no longer just about some VMs whose memory is running on a host. Now the actual DATA is on that host, probably including the data of VMs that do not run on that host! This is the same for every hyper-converged solution!

[…] (ESX Virtualization) Why I love VSAN! (Frank Denneman) Is VSAN more affordable? (Hans Deleenheer) VSAN Clustering – Fact Check Update! (Hans Deleenheer) VMware VSAN Launch Q&A (Ivobeerens.nl) VMware’s new VSAN – What Matters […]